Semantic Search with Machine Learning, TypeScript, and Postgres

Make your search smarter with machine learning.

The ever-growing abundance of information on the internet has made search engines indispensable. A key challenge for modern search engines is to deliver relevant search results by understanding the intent behind users' queries. In this article, we will explore semantic search, a powerful technique for improving search results, and learn how to implement it using machine learning, TypeScript, and Postgres.

This article is targeted at developers who want to learn how to implement semantic search in their applications. It assumes basic knowledge of TypeScript, Python, and SQL. Although this heavily relies on machine learning techniques, it is not assumed that you have any prior knowledge of machine learning. I will explain the necessary concepts as we go along and promise to never use any fancy math formula. Instead, I will express everything in plain English or simple code.

The code below is also available on GitHub as a small CLI application. See github.com/marcj/typescript-semantic-search.

Semantic Search vs Traditional Search

One of the key features of databases in general is their built-in text search capability. Traditional search relies on pattern matching, using operators such as LIKE or regular expressions, and full-text search, which is more efficient and offers a better ranking of search results.

However, traditional search methods have limitations. They rely heavily on exact keyword matches, which can lead to irrelevant results if the user's query does not contain the exact keywords or phrases present in the indexed data. While traditional search methods can be effective for simple queries, they are not well-suited for more complex queries that require a deeper understanding of the user's intent. Imagine a big e-commerce website that sells a wide variety of products. It is crucial for the search engine to provide relevant results for queries, even if that means that the engine has to search for synonyms or related terms.
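To make this limitation concrete, here is a minimal sketch of keyword matching in plain Python. The product titles and the `keyword_search` helper are made up for illustration; the matching only roughly mimics what a LIKE-based query does.

```python
# Toy product catalog; the titles are invented for this illustration.
products = [
    "Wireless noise-cancelling headphones",
    "Bluetooth over-ear headset with microphone",
    "Stainless steel water bottle",
]

def keyword_search(query, documents):
    """Return documents that contain every word of the query (case-insensitive)."""
    words = query.lower().split()
    return [doc for doc in documents if all(word in doc.lower() for word in words)]

print(keyword_search("headphones", products))
# ['Wireless noise-cancelling headphones']
print(keyword_search("cordless headphones", products))
# []
```

The second query finds nothing, although "cordless" and "wireless" mean the same thing, and the headset is never found because it does not contain the word "headphones".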

Semantic Search: A Smarter Approach

Semantic Search aims to address the limitations of traditional search methods by understanding the intent behind users' queries and returning more relevant results. Modern approaches do this by leveraging natural language processing (NLP) and machine learning techniques to analyze and comprehend the meaning of words and phrases within a given context.

Semantic search considers factors such as synonyms, word order, and the relationships between words to better understand the user's query. This allows it to return results that may not contain the exact keywords used in the query but are still relevant to the user's intent.

For instance, let's examine the query below:

query = "smart home security system with voice control."

A conventional search engine might display results that match the exact keywords "smart home", "security system", and "voice control", or more fine-grained matches such as each keyword individually.

A semantic search engine, on the other hand, would understand the query's intent and provide results for smart home security systems equipped with voice control capabilities, even if they don't explicitly mention the phrases "smart home", "security system", or "voice control". By leveraging semantic understanding such as synonyms, the search engine can offer more accurate recommendations for users looking for specific features in their smart home security setup.

By understanding the meaning behind queries and considering the relationships between words, semantic search provides users with more relevant and accurate search results, improving the overall search experience.

Current state-of-the-art semantic search engines are based on machine learning models that were trained on large amounts of text data. These models are known as language models or, more concretely, embedding models. The name comes from the fact that they take text as input and produce a vector of numbers (the embedding) as output.

These models can understand the meaning of words and phrases within a given context and can be used to generate embeddings for text documents. These embeddings can then be used to compare the similarity between two documents or between a document and a query. By "document" we mean a single sentence or a small paragraph.

To understand how and why this works, it's important to take a closer look at embeddings.

Sentence Embeddings

A sentence embedding is a vector representation of a sentence or small paragraph. It is a mathematical representation of the meaning of the content. These machine learning models learn to output similar embeddings for sentences that have a similar meaning. So if two sentences are similar in meaning, their embeddings will be similar as well. This does not necessarily mean that the numbers in the vectors are close to each other, but rather that the vectors point in the same direction. For the sake of simplicity, we just say they are similar.

Let's see an example:

sentence1 = 'A cat is chasing a mouse.'
embedding1 = [0.11, 0.20, 0.31, 0.44, 0.51, 0.69, 0.03]

Running the sentence through the model produces an embedding like the one above. In reality, the embedding is much larger. A typical size is 384 dimensions (384 numbers), and some models produce fewer or more. For the sake of simplicity, we use a 7-dimensional embedding in our examples here.

So the embedding is a vector (an array) of 7 numbers in this case. A single number in it is not directly related to a word, since no matter how many words your sentence has, the embedding is always the same size. So the embedding is not a list of numbers for each word in the sentence, but rather a list of numbers that represent various features or properties of the sentence.

In addition to compressing the information of the sentence into these numbers, the model has also learned to output similar embeddings for sentences that have a similar meaning. So if we have two sentences that are similar in meaning, their embeddings will be similar as well.

For example:

sentence2 = 'A cat is chasing a dog.'
embedding2 = [0.12, 0.21, 0.29, 0.4, 0.34, 0.68, 0.04]

As you can see, the numbers are very close to the embedding of sentence1 above, which means the vector points in the same direction. This means that the sentences are similar in meaning. In contrast, if we have two sentences that are not similar in meaning, their embeddings will be very different.

sentence3 = 'My meal tomorrow will be delicious.'
embedding3 = [0.91, 0.13, 0.01, 0.56, 0.23, 0.18, 0.1]

As you can see, these numbers are very different compared to embedding2. This means that the sentences are not similar in meaning.

To calculate the similarity between two embeddings, we can use the dot product. The dot product is a function that takes two vectors and returns a single number. A dot product of two vectors is the sum of the products of the corresponding entries of the two vectors. For example, the dot product of [1, 2, 3] and [4, 5, 6] is 1*4 + 2*5 + 3*6 = 32.

def dot(v1, v2):
    return sum(x*y for x, y in zip(v1, v2))

dot([1, 2, 3], [4, 5, 6]) # 32

We can interpret the dot product as a measure of similarity between two vectors. The larger the dot product, the more similar the vectors are.

Since dot products could return very big numbers, we instead use a slightly different way of calculating the similarity between two embeddings. We use the cosine similarity, which is a number between -1 and 1. The cosine similarity of two vectors is the dot product of the two vectors divided by the product of the two vectors' lengths (or magnitudes). For example, the cosine similarity of [1, 2, 3] and [4, 5, 6] is

(1*4 + 2*5 + 3*6) / (sqrt(1^2 + 2^2 + 3^2) * sqrt(4^2 + 5^2 + 6^2))
= 0.9746318461970762

Let's write it down in Python:

from math import sqrt

def dot(v1, v2):
    return sum(x*y for x, y in zip(v1, v2))

def cosine_similarity(v1, v2):
    return dot(v1, v2) / (sqrt(dot(v1, v1)) * sqrt(dot(v2, v2)))

cosine_similarity([1, 2, 3], [4, 5, 6]) # 0.9746318461970762

This way, the resulting numbers are easier to interpret. If the cosine similarity is near 1, the two vectors are very similar. In fact, if the cosine similarity is 1, the two vectors point in exactly the same direction. The smaller the number, the more different the two vectors are.
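A small sketch to illustrate why cosine similarity is preferred over the raw dot product: it ignores the length of the vectors and only looks at their direction. The vectors `a`, `b`, and `c` are arbitrary toy values chosen for this illustration.

```python
from math import sqrt

def dot(v1, v2):
    return sum(x * y for x, y in zip(v1, v2))

def cosine_similarity(v1, v2):
    return dot(v1, v2) / (sqrt(dot(v1, v1)) * sqrt(dot(v2, v2)))

a = [1, 2, 3]
b = [2, 4, 6]      # same direction as a, but twice as long
c = [-1, -2, -3]   # exactly the opposite direction of a

print(dot(a, a))                 # 14
print(dot(a, b))                 # 28, the dot product grows with vector length
print(cosine_similarity(a, b))   # approximately 1.0 (same direction)
print(cosine_similarity(a, c))   # approximately -1.0 (opposite direction)
```

Note that `a` and `b` are not identical, yet their cosine similarity is (up to floating-point rounding) 1, because only the direction matters.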

Let's use the cosine similarity to compare the embeddings of our three sentences above:

# embedding1 = encode('A cat is chasing a mouse.')
# embedding2 = encode('A cat is chasing a dog.')
cosine_similarity(embedding1, embedding2) # 0.9890270804484543

# embedding1 = encode('A cat is chasing a mouse.')
# embedding3 = encode('My meal tomorrow will be delicious.')
cosine_similarity(embedding1, embedding3) # 0.5331167975175103

As we can see, the cosine similarity of embedding1 and embedding2 is very high, which means that the sentences are similar in meaning. The cosine similarity of embedding1 and embedding3 is much lower, which means that the sentences are not similar in meaning.

Use an embedding model

In practice, to embed a sentence, you use a pre-trained, ready-to-use model, usually an open-source one. Commercial APIs are available by now as well, but they are usually not better, so there is no need to use them. In this article, we will use a pre-trained model that is freely available as open source from Hugging Face. Before we can use the model in our TypeScript application, we have to understand how it works in Python.

Let us take an example of a small database and a query we want to run against it. I'm using Python to demonstrate it for now, since the model and almost all semantic search libraries are written in Python.

from sentence_transformers import SentenceTransformer

embedder = SentenceTransformer('all-MiniLM-L6-v2')

database = [
    'A bird is singing in the tree.',
    'The boy is riding a bicycle.',
    'A woman is painting a picture.',
    'A cat is chasing a mouse.',
    'A group of friends are playing soccer.',
    'Two children are building sandcastles on the beach.',
    'A dog is barking loudly in the park.',
    'A chef is preparing a delicious meal.',
    'A dolphin is jumping out of the water.',
    'The sun is setting over the mountains.'
]

database_embeddings = embedder.encode(database)

OK, there is probably a lot of unfamiliar stuff here. Let's go through it step by step.

First, we import the SentenceTransformer class from the sentence-transformers library. This library is a wrapper around the Huggingface Transformers library, which is a library for natural language processing (NLP) tasks. It provides a simple interface for using pre-trained models for semantic search (and other tasks).

In order to execute the above code, you need to install the sentence-transformers library. You can do this by running the following command in your terminal:

pip install sentence-transformers

Next, we instantiate the SentenceTransformer class with the name of the model we want to use. In this case, we use the all-MiniLM-L6-v2 model, which is a pre-trained model for semantic search. This model was trained on more than 1 billion English sentences and thus has a good understanding of the English language. If you want to use it for another language, you can find a list of available models here.

In general, there are a lot of models out there and almost weekly new models are released. So if you want to use a model for a specific task, you should check out the Huggingface Model Hub to see if there is a model that fits your needs.

Next, we define a small database of sentences. This could be a database of products, blog posts, or any other kind of data.

Finally, we encode the sentences in the database using the encode method of the embedder to get an embedding. This method takes a list of sentences and returns a list of embeddings.

Query

Now that we understand what embeddings are and how they are generated, let us see how we can use them to find similar sentences in our small sentence database from above.

In database_embeddings we now have one embedding for each database entry. In order to query the database, we need to generate an embedding for the query as well. This has to be done for each new query. We can do this by using the embedder.encode method again.

query = 'A cat is chasing a dog.'
query_embedding = embedder.encode(query)

Now that we have the embedding for the query, we can calculate the cosine similarity between the query embedding and each database embedding.

db = zip(database, database_embeddings)
scores = []
for sentence, embedding in db:
    score = cosine_similarity(query_embedding, embedding)
    scores.append((score, sentence))

scores is now a list of tuples. Each tuple contains the cosine similarity score and the sentence.

(0.1724354434283575, 'A bird is singing in the tree.'),
(-0.021669311121845284, 'The boy is riding a bicycle.'),
(0.02662845781364533, 'A woman is painting a picture.'),
(0.7974124850155877, 'A cat is chasing a mouse.'),
(0.08126439373977194, 'A group of friends are playing soccer.'),
(-0.09995009845706435, 'Two children are building sandcastles on the beach.'),
(0.19601809634330147, 'A dog is barking loudly in the park.'),
(0.03997013026400708, 'A chef is preparing a delicious meal.'),
(0.08529057519644113, 'A dolphin is jumping out of the water.'),
(0.044714490752563216, 'The sun is setting over the mountains.')

We can sort the list by the cosine similarity score and print the top 5 results. Remember, the higher the cosine similarity, the more similar the two sentences are.

scores.sort(reverse=True)
for score, sentence in scores[:5]:
    print(f'{score:0.04f} {sentence}')

which prints

0.7974 A cat is chasing a mouse.
0.1960 A dog is barking loudly in the park.
0.1724 A bird is singing in the tree.
0.0853 A dolphin is jumping out of the water.
0.0813 A group of friends are playing soccer.

As we can see, the top result is the sentence that is most similar to our query "A cat is chasing a dog.". The second result is the second most similar sentence and so on. But you can already see there is a big gap between the first and the second result. To not display unrelated (small score) results, we can filter out all results that have a cosine similarity below a certain threshold. What the threshold is depends on your use case. Best is to play around with various queries and then decide based on that.

threshold = 0.5
scores = [score for score in scores if score[0] > threshold]

Note that the quality of the results depends on the quality of the sentence embeddings. If the sentence embeddings are not good, the results will not be good either. So if you are not happy with the results, you can try to use a different model or fine-tune the model on your own data. This is a bit more advanced and will be covered in a future article.
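Sorting, limiting, and filtering by a threshold can be combined into one small helper. This is only a sketch: the name `top_matches` and its default values are assumptions made for this illustration, and the scores are rounded values taken from the output above.

```python
def top_matches(scores, k=5, threshold=0.5):
    """Return at most k (score, sentence) pairs with a score above threshold, best first."""
    ranked = sorted(scores, key=lambda pair: pair[0], reverse=True)
    return [pair for pair in ranked[:k] if pair[0] > threshold]

# A few (score, sentence) pairs, rounded from the results above.
scores = [
    (0.1724, 'A bird is singing in the tree.'),
    (0.7974, 'A cat is chasing a mouse.'),
    (0.1960, 'A dog is barking loudly in the park.'),
]

print(top_matches(scores))
# [(0.7974, 'A cat is chasing a mouse.')]
print(top_matches(scores, threshold=0.18))
# [(0.7974, 'A cat is chasing a mouse.'), (0.196, 'A dog is barking loudly in the park.')]
```

Lowering the threshold lets weaker matches through, which is why it is worth trying several queries before settling on a value.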

Re-Ranking

Now that we have a way to query our database and get similar results, we have a solid foundation already. The quality of the results is only as good as the embeddings themselves. And here lies a problem. The embeddings are not perfect. They are good, but not perfect. So we cannot rely on them 100%. We need a way to re-rank the results to make sure that the most relevant results are on top and unrelated results are at the bottom.

Re-ranking is a common technique in information retrieval that takes a list of say 100 results and re-ranks them based on some other criteria. In our case, we want to re-rank the results based on the similarity of the query and the result. The higher the similarity, the higher the result should be ranked.

Let's make a quick example. Let's say we have a query "A cat is chasing a dog." and we get the following results from our database.

0.7974 A cat is chasing a mouse.
0.3960 A dog is barking loudly in the park.
0.1913 A group of cats are chasing several dogs.
0.1724 A bird is singing in the tree.
0.0853 A dolphin is jumping out of the water.

The first result is the most similar result to our query, but the second should not be ranked that high. The third on the other hand is very similar to our query, but it is ranked very low. This is because our embeddings are not perfect, and it's not trivial to learn an embedding representation of all the possible sentences in the world. So we have to help the model a bit.

This is where cross-encoders come in. Cross-encoders are a special kind of model, just like our sentence embedder, but instead of encoding a single sentence, they encode a pair of sentences. So we can use a cross-encoder to encode our query together with each result and get a score for each pair. The higher the score, the more similar the two sentences are. We can then use this score to re-rank all the results and create a new list with new scores from this cross-encoder. By sorting this new list, we get a new, final ranking of our results.

Cross-encoders are usually much more powerful than sentence embedders, but they are also much slower. So we can not use them to encode all our database entries. We can only use them to encode the query and the top 100 or so results. This is usually enough to get good results, but it depends on your use case.
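The retrieve-then-re-rank flow described above can be sketched in a few lines of Python. Everything here is an illustration under assumptions: `rerank` and `word_overlap` are hypothetical names, and `word_overlap` is a deliberately crude stand-in for a real cross-encoder (in a real setup, `score_pair` would wrap a cross-encoder model, for example the `CrossEncoder` class that sentence-transformers provides).

```python
def rerank(query, candidates, score_pair, top_n=100):
    """Re-score the first top_n candidates with score_pair and sort them best first.

    candidates: sentences already ordered by embedding similarity.
    score_pair: any function (query, sentence) -> float, e.g. a cross-encoder.
    """
    shortlist = candidates[:top_n]
    rescored = [(score_pair(query, sentence), sentence) for sentence in shortlist]
    # sorted() is stable, so ties keep their original (embedding-based) order.
    return sorted(rescored, key=lambda pair: pair[0], reverse=True)

def normalize(text):
    """Lowercase, drop the final period, and crudely strip plural endings."""
    return {word.rstrip('s') for word in text.lower().rstrip('.').split()}

def word_overlap(query, sentence):
    """Toy stand-in for a cross-encoder: fraction of query words found in the sentence."""
    query_words = normalize(query)
    return len(query_words & normalize(sentence)) / len(query_words)

candidates = [
    'A cat is chasing a mouse.',
    'A dog is barking loudly in the park.',
    'A group of cats are chasing several dogs.',
]
for score, sentence in rerank('A cat is chasing a dog.', candidates, word_overlap):
    print(f'{score:0.2f} {sentence}')
# 0.80 A cat is chasing a mouse.
# 0.80 A group of cats are chasing several dogs.
# 0.60 A dog is barking loudly in the park.
```

Even with this toy scorer, the sentence about cats chasing dogs moves above the barking dog. The important design point is that the expensive pairwise scorer only ever sees the short list, never the whole database.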

Re-Ranking, just like sentence embedding, can be done using free and open-source models. We do not cover this in this article, but you can find more information about this in the excellent documentation of sentence-transformers at Retrieve & Re-Rank.

Data Preparation

In the case of product data, blog posts, or other unstructured text data, we would have to prepare and convert our data first before we can run it through the model. Preparing here means we have to remove unnecessary white-space, any HTML and special characters, and chunk the text into smaller pieces and then encode each piece individually. Usually, these smaller pieces are sentences, but it's not always that easy.

The reason why we have to prepare our data is that we cannot encode a big paragraph or a whole article into a single embedding. The model can only encode a single sentence or small paragraph at a time. That is what the model was trained on, so we have to stick to that. The maximum input length that can be encoded differs from model to model. If the text is too long, the model will simply truncate it, which leads to information loss. So it is important to prepare the data in a way that the model can handle.

For a simple solution, we can just split the text into sentences, but this is not always an ideal solution. For example, if we have lots of very small sentences it would be hard to find the right embedding for it. Also, if we have a very long sentence, we would have to split it into multiple sentences first.

For the sake of simplicity, we will just split the text into sentences and encode each sentence individually. We will use the nltk library for this.

import nltk
nltk.download('punkt')
from nltk.tokenize import sent_tokenize

def prepare(text):
    sentences = sent_tokenize(text)
    # strip all white-space from the sentences and remove empty sentences
    return [s.strip() for s in sentences if len(s) > 0]

text = """
A cat is chasing a mouse! A dog is barking loudly in the park.
A bird is singing in the tree.
"""

sentences = prepare(text)

Here is the result:

['A cat is chasing a mouse!',
 'A dog is barking loudly in the park.',
 'A bird is singing in the tree.']

This prepare function is important when we want to embed our data. Keep it in mind, it will be used later.
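As mentioned, plain sentence splitting struggles with very short sentences. One possible refinement, shown here only as a sketch, is to merge neighbouring sentences into slightly larger chunks. The function name `merge_short_sentences` and the word limits are assumptions for this illustration; a real limit should follow the model's documented maximum input length.

```python
def merge_short_sentences(sentences, min_words=5, max_words=40):
    """Greedily merge neighbouring sentences so that chunks are not too short.

    A sentence is appended to the current chunk while the chunk has fewer than
    min_words words and the merged chunk stays within max_words words.
    """
    chunks = []
    current = ''
    for sentence in sentences:
        if not current:
            current = sentence
            continue
        current_len = len(current.split())
        merged_len = current_len + len(sentence.split())
        if current_len < min_words and merged_len <= max_words:
            current = f'{current} {sentence}'
        else:
            chunks.append(current)
            current = sentence
    if current:
        chunks.append(current)
    return chunks

sentences = [
    'Great price.',
    'Works well.',
    'The battery lasts for two full days of heavy use.',
]
print(merge_short_sentences(sentences, min_words=4))
# ['Great price. Works well.', 'The battery lasts for two full days of heavy use.']
```

The output of a function like this could be passed to the embedder in place of the raw sentence list.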

Database

In the example above, we used a small database (a simple array) with only a few sentences. In reality, we would have a much bigger database with thousands or even millions of sentences. In order to query such a big database, we need to use a more efficient way of calculating the cosine similarity between the query embedding and each database embedding.

If you have worked with popular databases like PostgreSQL, MySQL, or SQLite before, you might be wondering how these databases would calculate the cosine similarity of embeddings, let alone storing embeddings. The answer is, they can't. These databases are not designed to store and query embeddings.

Embeddings are nothing more than vectors, that is, arrays of numbers, and these databases can indeed store arrays in one way or another. To query them efficiently, however, they need to be indexed. And that's where the problem starts. These databases are not designed to index long arrays. They are designed to index strings, numbers, and other data types, but not arrays in the way we need them for cosine similarity.

In the last months, we have seen a lot of new vector databases popping up. Why the name "vector" database? Because embeddings are vectors, modern semantic search with machine learning is all about vectors as we have learned.

Do you need any of these fancy new vector databases? No. We can use extensions to our well-known databases. After all, we don't want to reinvent everything here. We have embeddings that need to be stored alongside our other data, and we need an operator to calculate the cosine similarity. Compared to the feature set that, for example, PostgreSQL already offers, this is a tiny piece of new functionality. We just want a new data type (embeddings) and a new operator (a cosine similarity operator).

This is exactly what pgvector offers. It's a PostgreSQL extension that allows us to store and index embeddings and query them efficiently. It's open-source and free to use. You can find the source code on GitHub: https://github.com/pgvector/pgvector.

Note that the way the database indexes the embeddings affects how good the results will be. Indexing embeddings is more complex than indexing simple data types like strings and numbers, hence we have more ways to configure the index. The default configuration is a good starting point, but you might want to tweak it for your use case, especially if you see performance degradation or bad results. I will probably cover this in a future article.

Install pgvector

To install pgvector you need to have PostgreSQL installed. If you don't have it installed yet, you can download it here: https://www.postgresql.org/download/.

git clone --branch v0.4.0 https://github.com/pgvector/pgvector.git
cd pgvector
make install

The extension is not loaded by default and needs to be enabled per database. To do that, execute the following SQL:

CREATE EXTENSION vector;

Now pgvector is installed and ready to use. To store embeddings, we need to create a table with a new vector column. The vector column is a new data type that is provided by the extension. It's a fixed-length array. The length of the array is defined by the dimension of the embedding. In our case, the dimension is defined by the model all-MiniLM-L6-v2, so we will use a dimension of 384. If you have a different model, you need to use the dimension of that model.

CREATE TABLE sentences
(
    id SERIAL PRIMARY KEY,
    sentence TEXT NOT NULL,
    embedding VECTOR(384) NOT NULL
);

Note that we also have a primary key column id and a column sentence that stores the original sentence. We need the id column to identify the sentence in the database. We store the original text alongside the embedding in the sentence column, so we can display it later.

Now that we have everything we need as a basis, we can switch to our actual application, written in TypeScript.

TypeScript to Python

Now it's time to switch to TypeScript. After all, TypeScript is predominantly used in the web and this article is about making semantic search available in your web application.

You might be wondering why TypeScript? Why not everything in Python? The answer is simple: JavaScript/TypeScript is the most popular language in the web, you can share code between backend and frontend, and it's the language I use for all my web projects. I want to make semantic search available to as many people as possible, and TypeScript is the best choice for that. Python is a great language, but it's not the language of the web. The only reason we have to deal with Python is that many models are currently only easily available in Python. But this will change in the future, and we will, sooner or later, have better integrations of these models in JavaScript/TypeScript without the need to use Python.

Before we can interact with the database, we need to find a way to talk to our model in Python to encode our sentences as embeddings. There are several solutions to this problem. We will use a library called pybridge, which I have used several times in my projects and which makes it very easy to interact with our embedder in a fast and type-safe way.

Before you can use pybridge, you need to install it:

npm install pybridge
npm install --save-dev @deepkit/type-compiler

The @deepkit/type-compiler package is a peer dependency of pybridge and needs to be installed as well.

To get the serialization and deserialization of data between TypeScript and Python right, we need to enable TypeScript runtime types reflection in tsconfig.json:

{
    "compilerOptions": {
        // ...
    },
    "reflection": true
}

Now, let's see how we can use pybridge to interact with our Python embedder.

import { PyBridge } from 'pybridge';

const pythonCode = `
from sentence_transformers import SentenceTransformer
import nltk
nltk.download('punkt')
from nltk.tokenize import sent_tokenize

embedder = SentenceTransformer('all-MiniLM-L6-v2')

def embed(texts):
    return embedder.encode(texts).tolist()

def prepare(text):
    sentences = sent_tokenize(text)
    # strip all white-space from the sentences and remove empty sentences
    return [s.strip() for s in sentences if len(s) > 0]
`;

export interface PythonAPI {
    embed(text: string[]): number[][];
    prepare(text: string): string[];
}

const bridge = new PyBridge({ python: 'python', cwd: process.cwd() });
const pythonApi = bridge.controller<PythonAPI>(pythonCode);

There is a lot to unpack here. First, we import the PyBridge class from the pybridge library. Then we define a string with the Python code we want to execute. This code is the same code we used in the Python example above. The Python functions embed and prepare will be made available to us in TypeScript. We have defined an interface that mirrors the Python functions.

This works under the hood by starting a Python process and executing the Python code. The Python code is executed in a separate process, so it's not the same process as our TypeScript code. The process is kept alive until we call bridge.close().

The PythonAPI interface is used to type the pythonApi variable. The pythonApi controller instance is an object that has the same functions as the Python code, but always returns a Promise. We can now use this pythonApi to call the Python functions from TypeScript.

const sentences = await pythonApi.prepare('This is a sentence. This is another sentence.');
// ['This is a sentence.', 'This is another sentence.']

const embeddings = await pythonApi.embed(sentences);
// [[...], [...]]

Database ORM

In order to not write custom SQL all the time, we will use an ORM (Object-Relational Mapper) to interact with the database. There are many ORMs out there, but we will use Deepkit ORM because it's very fast, easy to use, and has a lot of features.

import { AutoIncrement, Postgres, PrimaryKey } from '@deepkit/type';

export class Sentence {
    id: number & PrimaryKey & AutoIncrement = 0;
    sentence: string = '';
    embedding?: number[] & Postgres<{ type: 'vector(384)' }>;
}

This is our model class. It's a simple class with three properties. The id property is the primary key and is auto-incremented. The sentence property stores the original sentence, and the embedding property stores the embedding. Note that the embedding property is an array of numbers in TypeScript. This is because the vector data type is an array of numbers. The vector data type is provided by the pgvector extension.

Now that we have our model class, we can create a database connection and interact with the database.

import { Database } from '@deepkit/orm';
import { PostgresDatabaseAdapter } from '@deepkit/postgres';

const adapter = new PostgresDatabaseAdapter({
    host: 'localhost', database: 'postgres',
    user: 'postgres', password: 'postgres',
});
const database = new Database(adapter, [Sentence]);
await database.migrate(); // creates the table if it does not exist

Next, we can bring everything together and create a function that takes a sentence and stores it in the database.

async function storeSentence(sentence: string) {
    const sentences = await pythonApi.prepare(sentence);
    const embeddings = await pythonApi.embed(sentences);

    // one Sentence row per prepared sentence
    const sentencesToStore: Sentence[] = [];
    for (let i = 0; i < sentences.length; i++) {
        const s = new Sentence();
        s.sentence = sentences[i];
        s.embedding = embeddings[i];
        sentencesToStore.push(s);
    }
    await database.persist(...sentencesToStore);
}

This function first calls the Python API to prepare the text and then encodes each resulting sentence as an embedding. Then it creates a new Sentence instance for each sentence and stores it in the database.

And like that, we have a function that stores a sentence and its embedding in the database. We can now call this function from our web application whenever we want to store a new sentence.

Querying the Database

Now that we have stored our sentences in the database, we can use the pgvector extension to search for similar sentences. We will use the new <=> operator to calculate the cosine similarity between two vectors.

import { sql } from '@deepkit/sql';

async function searchSimilar(sentence: string) {
    const embeddings = await pythonApi.embed([sentence]);
    // pgvector accepts a vector literal such as '[0.1,0.2,...]'
    const vector = JSON.stringify(embeddings[0]);
    const similarSentences = await database.raw(sql`
        SELECT
            id, sentence,
            1 - (embedding <=> ${vector}::vector) as score
        FROM sentences
        ORDER BY embedding <=> ${vector}::vector
        LIMIT 5
    `).find();
    return similarSentences;
}

Lots of things are happening here. The most important is that we place the actual comparison of embeddings using the <=> operator in the ORDER BY clause. You might be wondering why we do not have an ORDER BY ... DESC here, since we've learned that the cosine similarity is in the range [-1, 1], and we want to have the most similar sentences first (i.e. the highest cosine similarity score).

The reason is that the <=> operator returns a cosine distance, not a cosine similarity score. The cosine distance is in the range [0, 2], where 0 means the vectors point in the same direction and 2 means they are as different as possible. So it is basically inverted compared to the cosine similarity score. The reason pgvector returns a cosine distance and not a cosine similarity score for its <=> operator is a technical limitation of Postgres.

cosine_distance = 1 - cosine_similarity
cosine_similarity = 1 - cosine_distance

In order to bring the distance back to a cosine similarity score, we compute 1 - (a <=> b), so that the score column has the same meaning as in the Python example: a cosine similarity score in the range [-1, 1].

Example

Next, let us try these new functions in an example.

const sentences = [

'A bird is singing in the tree.',

'The boy is riding a bicycle.',

'A woman is painting a picture.',

'A cat is chasing a mouse.',

'A group of friends are playing soccer.',

'Two children are building sandcastles on the beach.',

'A dog is barking loudly in the park.',

'A chef is preparing a delicious meal.',

'A dolphin is jumping out of the water.',

'The sun is setting over the mountains.'

];

async function app() {

for (const sentence of sentences) {

await storeSentence(sentence);

}

}

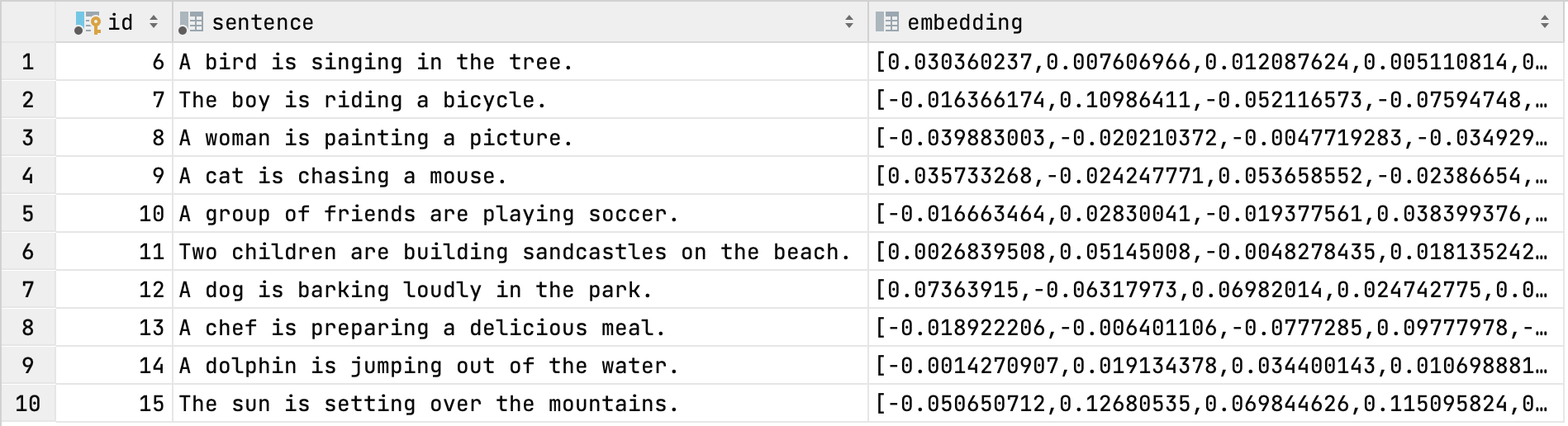

app();This code will store all the sentences in the database. Let's see how the table looks now in the database.

Now that we have content in our table we can search for similar sentences.

const query = 'Human riding a bicycle';

async function app() {
    const similarSentences = await searchSimilar(query);
    console.log('Similar sentences for', query);
    for (const sentence of similarSentences) {
        console.log(` ${sentence.score}: ${sentence.sentence}`);
    }
}

app();

which will print

Similar sentences for Human riding a bicycle
 0.6459270516037506: The boy is riding a bicycle.
 0.11862002085922119: The sun is setting over the mountains.
 0.05951275756313745: A woman is painting a picture.
 0.05745604977070862: A chef is preparing a delicious meal.
 0.02242597637226773: A group of friends are playing soccer.

Done. We have successfully implemented a semantic search engine using Machine Learning, TypeScript, and Postgres (and a bit of Python).

Real-World Use Cases

A quick word on real-world applications using semantic search. In the example above we have a very simple table called sentences with only three columns: id, sentence, and embedding.

In more complex scenarios where you want to make, for example, multiple products or multiple documents searchable, you would add a product_id, document_id, etc. column to the table. This way you can store the embeddings of multiple products, documents, etc. in the same table and search across all of them in one place.

Having this additional reference information at the table level is very useful because it allows you to filter the results by the product, document, etc. that you are interested in. For example, if you have a product catalog with millions of products, you can use semantic search to find similar products to a given product. But you can also filter the results by the product category, price range, etc. that you are interested in. Also, for all the results you get back, you can easily retrieve the product information from the database that belongs to the result.
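The idea of combining a metadata filter with similarity ranking can be sketched in plain Python. The rows, the `category` field, and the 3-dimensional embeddings below are hypothetical toy data; in the database, the filter would be a WHERE clause next to the ORDER BY on the embedding column.

```python
from math import sqrt

def cosine_similarity(v1, v2):
    dot = sum(x * y for x, y in zip(v1, v2))
    return dot / (sqrt(sum(x * x for x in v1)) * sqrt(sum(y * y for y in v2)))

# Hypothetical rows: (product_id, category, sentence, embedding).
rows = [
    (1, 'bikes', 'Lightweight road bicycle', [0.9, 0.1, 0.0]),
    (2, 'toys', 'Toy bicycle for dolls', [0.8, 0.2, 0.1]),
    (3, 'bikes', 'Bicycle helmet', [0.5, 0.5, 0.1]),
]

def search(query_embedding, category, limit=5):
    """Filter by category first, then rank the remaining rows by similarity."""
    candidates = [row for row in rows if row[1] == category]
    scored = [
        (cosine_similarity(query_embedding, embedding), product_id, sentence)
        for product_id, _, sentence, embedding in candidates
    ]
    return sorted(scored, reverse=True)[:limit]

for score, product_id, sentence in search([1.0, 0.0, 0.0], 'bikes'):
    print(product_id, sentence)
# 1 Lightweight road bicycle
# 3 Bicycle helmet
```

Because each result carries its product_id, looking up the full product record afterwards is a simple primary-key query.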

The more complex the data you want to make searchable, the more work you have to invest in preparing the database structure to make sure you have all information alongside the embeddings to make the results useful.

Conclusion

In this article, we have learned how to implement a semantic search engine using Machine Learning, TypeScript, and Postgres. We have seen how to use the pgvector extension to store embeddings in Postgres and how to use the <=> operator to calculate the cosine similarity between two vectors. We have also seen how to make use of machine learning models written in Python from TypeScript using the pybridge library.

There is still a lot to learn, and this barely scratches the surface of what is possible. If you want to learn more about semantic search, I recommend checking out the excellent SentenceTransformers site at sbert.net. Besides similarity search, it covers a lot of other use cases and shows several basic ways to fine-tune the models to your specific use case.

Code

The code above is available on GitHub at github.com/marcj/typescript-semantic-search. It contains the full code for the example above and also a CLI tool to interact with the functions we've used above.